To render anomaly-free scenes in OpenGL that have translucent objects, we cannot render objects in any arbitrary order as we have been able to do to this point. At a minimum, we would have to try to sort translucent objects based on distance from the eye (i.e., based on z-coordinates of vertices in the eye coordinate system) during each display callback. If there were large numbers of translucent objects, this sorting could easily destroy interactivity.

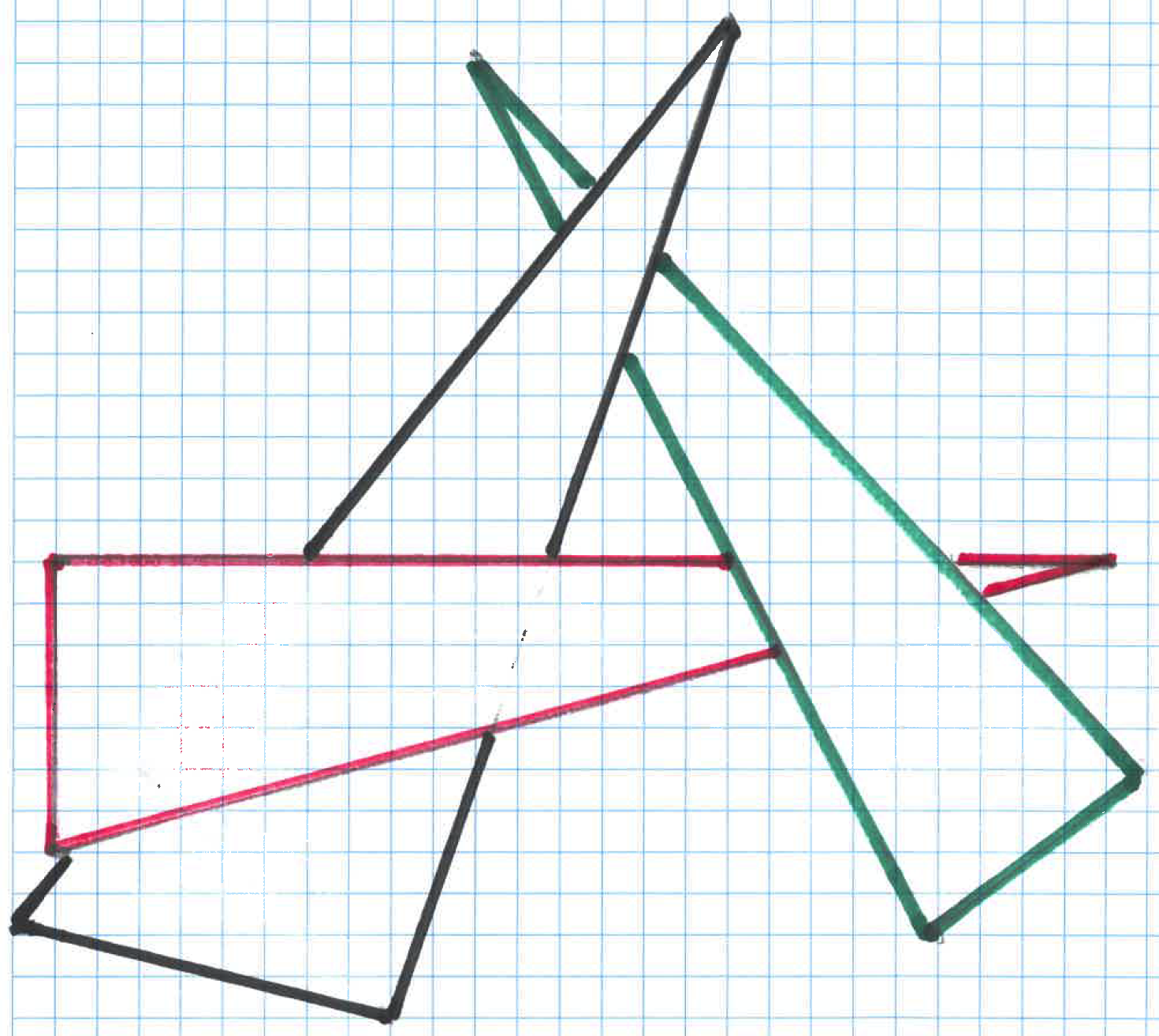

Worse yet, the general problem of depth sorting a collection of triangles is not well-defined. For example, how would you sort two triangles, each of which has three different eye z-coordinates and whose resulting z ranges overlap? In fact, it turns out that it is possible for three or more triangles to overlap in the eye z-coordinate direction in such a way that no sorting order is possible.
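As a minimal sketch of the per-frame sort described above, the following orders objects back to front using a single representative eye-space z per object. The `TranslucentItem` record and `sortBackToFront` function are hypothetical names introduced for illustration, and the code assumes the usual OpenGL convention that the eye looks down the negative z axis. Collapsing each object to one z value is exactly the simplification that breaks down when the z ranges of triangles overlap.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical record for one translucent object (not part of the course framework).
struct TranslucentItem {
    int id;       // identifies the object to draw
    double eyeZ;  // one representative eye-space z, e.g., that of the centroid
};

// Order items back to front. Assuming the eye looks down -z, the farthest
// item has the most negative z and must be drawn first.
inline void sortBackToFront(std::vector<TranslucentItem>& items)
{
    auto fartherFirst = [](const TranslucentItem& a, const TranslucentItem& b) {
        return a.eyeZ < b.eyeZ;
    };
    std::stable_sort(items.begin(), items.end(), fartherFirst);
    assert(std::is_sorted(items.begin(), items.end(), fartherFirst));
}
```

A sort like this would have to be repeated in every display callback, since the eye-space z values change whenever the view changes.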

As an alternative to this expensive and ill-defined problem, we will study simpler approaches that do not require sorting polygons at all and often yield acceptable results. While they are simple and adequate for our purposes, it is very easy to demonstrate their shortcomings. For example, given two translucent polygons, A and B, an approach that does not sort based on distance from the eye in each frame will render A and B in the same order during every display callback. As a result, we will see the same color in any part of the screen where they overlap, regardless of which is closer to the eye at that time. Nevertheless, these approaches generally produce reasonable displays, provided such areas of overlap are not visually dominant. If they are, then at least some limited per-frame sorting of translucent polygons will be required to achieve satisfactory results.
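The order dependence can be made concrete with a small CPU-side simulation. The sketch below assumes the blend rule result = alpha * source + (1 - alpha) * destination, which is what the factors GL_SRC_ALPHA and GL_ONE_MINUS_SRC_ALPHA select. The names `RGB`, `blendOver`, and `drawTwo` are hypothetical helpers, not OpenGL calls.

```cpp
#include <array>
#include <cassert>

using RGB = std::array<double, 3>;

// Blend a source color over a destination color:
// result = srcAlpha * src + (1 - srcAlpha) * dst.
inline RGB blendOver(const RGB& src, double srcAlpha, const RGB& dst)
{
    assert(srcAlpha >= 0.0 && srcAlpha <= 1.0);
    RGB out{};
    for (int i = 0; i < 3; ++i)
        out[i] = srcAlpha * src[i] + (1.0 - srcAlpha) * dst[i];
    return out;
}

// Color left in the framebuffer after drawing `first` and then `second`
// on top of an initial background color.
inline RGB drawTwo(const RGB& background,
                   const RGB& first, double firstAlpha,
                   const RGB& second, double secondAlpha)
{
    return blendOver(second, secondAlpha,
                     blendOver(first, firstAlpha, background));
}
```

With a black background and red and blue polygons that both have alpha 0.5, drawing red first leaves (0.25, 0, 0.5) in the overlap, while drawing blue first leaves (0.5, 0, 0.25). A fixed drawing order therefore produces the same one of these two colors no matter which polygon is actually closer to the eye.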

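The actual color blending occurs in the framebuffer operations portion of the pipeline, after the fragment shader executes. Hence we rely more heavily on OpenGL calls (as opposed to GLSL shader code we write) to specify what is to be done. Specifically, the code in this section uses glDepthMask to control writes to the depth buffer, glEnable and glDisable with GL_BLEND to turn blending on and off, and glBlendFunc to select the blending factors.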

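At a high level, approaches such as those we are about to study require the following general CPU-side handling of display callbacks: clear the color and depth buffers with depth writes enabled; draw the opaque objects with blending disabled; then draw the translucent objects with depth writes disabled and blending enabled.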

This can be implemented in an overridden handleDisplay method as follows (assume "class ExtendedController : public GLFWController", and assume there is a "bool drawingOpaqueObjects" instance variable in class ExtendedController):

    void ExtendedController::handleDisplay()
    {
        prepareWindow(); // an inherited GLFWController method needed for some platforms

        // depth writes must be enabled for the depth buffer to be cleared
        glDepthMask(GL_TRUE);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

        // draw opaque objects
        glDisable(GL_BLEND);
        drawingOpaqueObjects = true; // record in instance variable so ModelView instances can query
        renderAllModels();

        // draw translucent objects
        glDepthMask(GL_FALSE);
        glEnable(GL_BLEND);
        glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);
        drawingOpaqueObjects = false; // record in instance variable so ModelView instances can query
        renderAllModels();

        swapBuffers();
    }

    // The following must be a public method in class ExtendedController:
    bool ExtendedController::drawingOpaque() const // CALLED FROM SceneElement or descendant classes
    {
        return drawingOpaqueObjects;
    }

Given this general approach to handling display callbacks, there are at least two possible implementation approaches for rendering ModelView/SceneElement instances. I recommend the "(Nearly) pure GPU approach" for your 672 projects because it is easier, but it is important to understand the tradeoffs, so we will study both.
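Whichever approach is used, something must decide, for each piece of geometry, whether it belongs in the pass currently being drawn. The sketch below shows that decision as a plain function. The name `belongsToCurrentPass` is hypothetical, and where the test is actually made is an assumption here: a CPU-side implementation might apply it in a model's render method after querying drawingOpaque(), while a GPU-side implementation might apply the same test per fragment and discard the fragments that fail it.

```cpp
#include <cassert>

// Hypothetical helper: should geometry with opacity `alpha` be drawn in the
// current pass? Opaque geometry (alpha == 1) is drawn only in the opaque
// pass; translucent geometry (alpha < 1) only in the translucent pass.
inline bool belongsToCurrentPass(double alpha, bool drawingOpaqueObjects)
{
    assert(alpha >= 0.0 && alpha <= 1.0);
    const bool isOpaque = (alpha >= 1.0);
    return isOpaque == drawingOpaqueObjects;
}
```

Because renderAllModels() is called twice per frame, every object is visited in both passes, and a test of this kind ensures each one contributes to exactly one of them.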

Hybrid approaches are also possible. For example, if large, easily identifiable and separable portions of the scene are opaque, draw them first with "sceneHasTranslucentObjects" set to zero. Then proceed with the GPU approach for the rest of the scene.

Regardless of which approach is used, you will need to add a new uniform variable to the other material properties. For example:

The complete set of uniform material properties will then be: ka, kd, ks, m, and alpha. The first three are of type vec3; the last two are of type float. These properties are encapsulated in the PhongMaterial class.
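A minimal sketch of a material record with these five properties might look as follows. This is not the course's PhongMaterial class, whose actual interface may differ; `PhongMaterialSketch`, `isOpaque`, and `withAlpha` are hypothetical names, and the reading of m as the specular exponent is an assumption.

```cpp
#include <array>
#include <cassert>

// Hypothetical sketch; the real PhongMaterial class may be organized differently.
struct PhongMaterialSketch {
    std::array<float, 3> ka;  // ambient reflectance (a vec3 in GLSL)
    std::array<float, 3> kd;  // diffuse reflectance (a vec3 in GLSL)
    std::array<float, 3> ks;  // specular reflectance (a vec3 in GLSL)
    float m;                  // assumed to be the specular exponent
    float alpha;              // opacity: 1.0 is opaque, smaller is more translucent

    bool isOpaque() const { return alpha >= 1.0f; }
};

// Return a copy of `mat` with a different opacity.
inline PhongMaterialSketch withAlpha(PhongMaterialSketch mat, float alpha)
{
    assert(alpha >= 0.0f && alpha <= 1.0f);
    mat.alpha = alpha;
    return mat;
}
```

Keeping alpha alongside the other material properties means a single material object carries everything needed both for lighting and for deciding which rendering pass an object belongs to.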

Translucency using the schemes described here interacts with other functionality. For example: